Shout out to those hard-working generative adversarial networks (GANs)! OK, I don’t really know about hard-working, but they’re pretty cool. Let’s review.

Intro to GAN

GANs were introduced by Ian Goodfellow and colleagues in 2014, and researchers at Nvidia, the computer graphics company, built on the idea with StyleGAN in 2018. The studies are linked.

I’m not going to pretend I understand the details of either study. But based on the Wikipedia article linked above, what I can explain is that a GAN consists of two neural networks that compete against each other in one of those mathematical strategy “games” researchers like to play. One network (the generator) creates new images (or text), and the other (the discriminator) tries to tell those creations apart from real examples. As each network gets better at its job, the generated output becomes more convincing.
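For the curious, here’s a minimal Python sketch of the “game” itself, based on the value function from the original 2014 GAN paper: V(D, G) = E[log D(x)] + E[log(1 − D(G(z)))], where x is real data and z is random noise fed to the generator. The discriminator tries to push this score up; the generator tries to push it down. The function name `gan_value`, the toy numbers, and the two example discriminators are hypothetical illustrations, not code from StyleGAN or any real library, and the sketch only scores one round of the game; it doesn’t train anything.

```python
import math
from typing import Callable, Sequence


def gan_value(
    discriminator: Callable[[float], float],
    generator: Callable[[float], float],
    real_samples: Sequence[float],
    noise_samples: Sequence[float],
) -> float:
    """Empirical estimate of the GAN value function V(D, G).

    V(D, G) = E_x[log D(x)] + E_z[log(1 - D(G(z)))]
    D(x) is the discriminator's probability that x is real.
    The discriminator wants this number high; the generator wants it low.
    """
    # Average log-probability the discriminator gives real data of being real.
    real_term = sum(math.log(discriminator(x)) for x in real_samples) / len(real_samples)
    # Average log-probability it gives generated data of being fake.
    fake_term = sum(
        math.log(1.0 - discriminator(generator(z))) for z in noise_samples
    ) / len(noise_samples)
    return real_term + fake_term


# Hypothetical toy setup: "real" data clusters near 3.0, and an untrained
# generator just passes its noise through unchanged.
real = [2.9, 3.0, 3.1]
noise = [-0.1, 0.0, 0.1]


def untrained_generator(z: float) -> float:
    return z


def sharp_d(x: float) -> float:
    # A discriminator that easily separates values near 3 from values near 0.
    return 1.0 / (1.0 + math.exp(-4.0 * (x - 1.5)))


def confused_d(x: float) -> float:
    # A discriminator that can't tell real from fake and always guesses 0.5.
    return 0.5


print(gan_value(sharp_d, untrained_generator, real, noise))     # close to 0
print(gan_value(confused_d, untrained_generator, real, noise))  # -log(4), about -1.386
```

The confused score, −log 4, is the value the original paper identifies at the game’s end point, where the generator’s fakes match the real data so well that the discriminator can do no better than a coin flip.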

I think some people will find it creepy, but I think it’s cool. With the human photos, it’s hard not to project humanity onto the faces, even though, logically, these are people who have never existed. The generated fake people seem, somehow... special.

Here’s a quick intro to GANs and some links to explore. Enjoy!

Explore Some Fake Stuff

As I said above, one of the possible outputs of a GAN is a surprisingly realistic-looking photograph. View some of them at thispersondoesnotexist.com. Every time you refresh, you get a new person who has never existed. It’s like dreaming for computers.

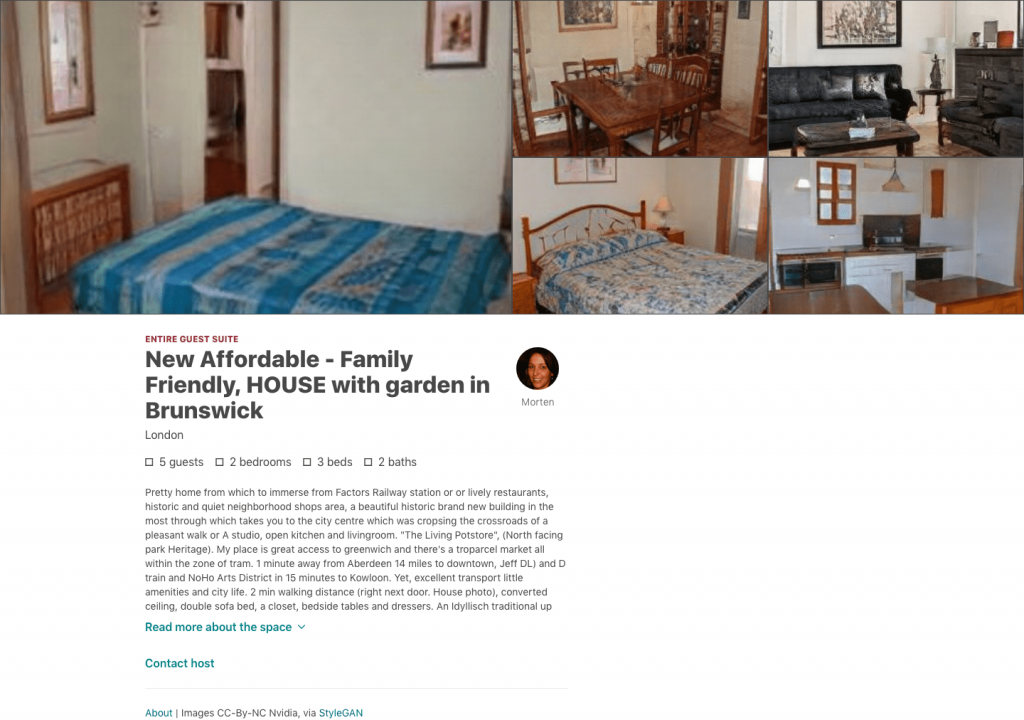

This GitHub repository lists a few more GAN-powered websites that generate photographs of humans, fake rental ads, articles, anime, and other things that don’t exist. There are fake cats, too, but computers seem to have trouble with animals. I suggest not looking at them. (They’re creepy.)

Test Yourself, Human!

Some of these faces look pretty realistic. For instance, the image above-left is an “image of a young woman generated by StyleGAN, a generative adversarial network (GAN). The person in this photo does not exist, but is generated by an artificial intelligence based on an analysis of portraits.” The ad on the right is fake, too. Could you tell?

If you really want to test yourself, the website Which Face Is Real shows two side-by-side images and asks you to pick the real one.

Unless you’re a ~~dog~~ cat on the internet, you can probably tell the difference (and probably ~~even~~ especially if you are a cat). The fake images have a few telltale signs, like smudged backgrounds, odd-looking teeth, and unusual wrinkles, and some of them just don’t seem right.

You can also test yourself with the fake poems at Bot Poet.

Offshoots

Composites – There are some offshoots of the original technology, like this GitHub repository, which shows an example of a composite image generated from a StyleGAN image and an image of the Mona Lisa.

Digital “models” – I wouldn’t say these examples are quite the same, but there are already digital “models” on Instagram and in advertising campaigns. Lil Miquela has over 1.5 million followers on Instagram, and there’s a modeling agency specializing in digital “models”.

Video – The project below uses around 8 frames of video to train its neural network, resulting in a real-time talking head model. It could be like one of those “deepfake” videos, except the new heads are people who don’t exist. And if you watch the video below, human, you can kind of tell: the new heads don’t look all that realistic (yet).

Few-Shot Adversarial Learning of Realistic Neural Talking Head Models

Statement regarding the purpose and effect of the technology

(NB: this statement reflects personal opinions of the authors and not of their organizations)

We believe that telepresence technologies in AR, VR and other media are to transform the world in the not-so-distant future. Shifting a part of human life-like communication to the virtual and augmented worlds will have several positive effects. It will lead to a reduction in long-distance travel and short-distance commute. It will democratize education, and improve the quality of life for people with disabilities. It will distribute jobs more fairly and uniformly around the World. It will better connect relatives and friends separated by distance. To achieve all these effects, we need to make human communication in AR and VR as realistic and compelling as possible, and the creation of photorealistic avatars is one (small) step towards this future. In other words, in future telepresence systems, people will need to be represented by the realistic semblances of themselves, and creating such avatars should be easy for the users. This application and scientific curiosity is what drives the research in our group, including the project presented in this video.

We realize that our technology can have a negative use for the so-called “deepfake” videos. However, it is important to realize that Hollywood has been making fake videos (aka “special effects”) for a century, and deep networks with similar capabilities have been available for the past several years (see links in the paper). Our work (and quite a few parallel works) will lead to the democratization of certain special effects technologies. And the democratization of technologies has always had negative effects. Democratizing sound editing tools led to the rise of pranksters and fake audio, and democratizing video recording led to the appearance of footage taken without consent. In each of the past cases, the net effect of democratization on the World has been positive, and mechanisms for stemming the negative effects have been developed. We believe that the case of neural avatar technology will be no different. Our belief is supported by the ongoing development of tools for fake video detection and face spoof detection alongside the ongoing shift toward privacy and data security in major IT companies.

Slightly off-topic, there’s a new Frontline documentary on AI. It’s two hours long, so it’s a commitment. It doesn’t really go into the GAN side of artificial intelligence, but it does discuss automation, privacy, and surveillance.

In the Age of AI FRONTLINE, from PBS. [1:54:16]

The documentary provides many reasons to be afraid of AI, particularly with regard to surveillance and the use of AI by governments. We can’t really predict what governments will do, but if behavior control is a goal of AI, there’s a natural user group: people who have trouble controlling their behavior. This would include people who have (or have had) issues or struggles with:

- substance abuse

- memory loss

- chemical imbalances in the brain

- ADHD

- neurological damage

- loss of motor control

Or even just reminding people to eat better and go outside more. I’m sure there are more. Anyway, that’s my thought. Seems fair to be afraid, but also there are some opportunities that shouldn’t be overlooked.

And now, on the cultural side: the trailer for Her. I don’t know if it’s possible to build an OS this advanced, but the movie makes for some interesting fantasy exploration.